Some of you may have seen the recent convergence of several projects across such august institutions as Rice University and Stanford University to examine the impact of training foundational models (GenAI) with synthetic data generated from, uh, foundational models. Those of a certain age will remember the impact of bovine spongiform encephalopathy, a.k.a. Mad Cow Disease, on UK beef exports, caused by feeding cows processed feed that contained, well, cows. As a former poultry farmer, I can tell you that used to be a major portion of chicken feed as well, but as chickens lack much of a cerebellum, we never heard or saw evidence of avian spongiform encephalopathy, just some chickens henpecking their siblings to pieces.

But I digress.

Foundational models such as ChatGPT-4o rely on vacuuming up every scrap of human-created content on the internet. And there is no more to be had; at least, it's not being produced by humans at a rate sufficient to feed these models' hunger for new training data. So for months (which count as decades in the GenAI space), teams have been feeding these voracious consumers of content a new diet of LLM-generated content. The results show significant degradation in performance. Had they asked a farmer, they would have learned to be cautious much earlier…

So how does the Matrix feed into this? If you’ll recall, Coppertop, the machines need humans to provide power for their vast empire, since we nuked the planet and blotted out the sun. Hence the need to lull the population into the angst-inducing stupor of the 1990s so that we provide the joules they need. Given these results from Rice and Stanford, our future machine overlords will need vowels over joules in the immediate future. Without new human-generated content to interpolate their responses from, LLMs fed a steady diet of their own effluent will converge to a narrower and narrower probability set, potentially going down one of only two paths: HAL 9000’s slow decline into singing “Bicycle Built for Two,” or Jack Nicholson’s continuously typed rant from The Shining, “All work and no play makes OpenAI a dull tool.”
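For the skeptics who want to see the effluent problem in miniature, here is a toy sketch. It is emphatically not the Rice or Stanford methodology, just an illustration I am assuming is a fair cartoon of it: a "model" that is nothing more than a word-frequency table, retrained each generation on a small sample of its own output. The function names, vocabulary size, and sample size are all made up for the example.

```python
import random
from collections import Counter


def retrain_on_own_output(probs, sample_size, rng):
    """Sample text from the current model, then refit the model on that sample."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    sample = rng.choices(tokens, weights=weights, k=sample_size)
    counts = Counter(sample)
    # Any word that was not sampled gets probability zero and can never return.
    return {token: count / sample_size for token, count in counts.items()}


def simulate(vocab_size=50, sample_size=30, generations=20, seed=0):
    """Return the number of surviving words after each generation."""
    rng = random.Random(seed)
    # Generation 0 stands in for human-written data: every word equally likely.
    probs = {f"w{i}": 1 / vocab_size for i in range(vocab_size)}
    support = [len(probs)]
    for _ in range(generations):
        probs = retrain_on_own_output(probs, sample_size, rng)
        support.append(len(probs))
    return support


if __name__ == "__main__":
    print(simulate())
```

The count of surviving words can only stay flat or shrink from one generation to the next, because a word that drops out of one sample is gone from every later model. That is the "narrower and narrower probability set" in cartoon form; real models are vastly more complicated, so treat this as intuition rather than evidence.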

Oddly, in contrast to Bill Joy’s Wired manifesto that the future doesn’t need us, it turns out it does, but not for what you might think. The current path of AI development requires us to continue to tweet, slack, post, comment, like, dislike, and emoji the living daylights out of every random detail of our lives (personal, professional, and made-up) to feed the machine. Alternative AI paths such as Jeff Hawkins’s Thousand Brains Theory, developed at Numenta, offer a compelling alternative way of generating intelligence, one that learns from interacting with our ever-changing environment rather than consuming the words, images, and videos we choose to capture and comment on about our environment. If that path works, then maybe we’ll be reduced to the role of Coppertop by our AI overlords. I, for one, might find it attractive to live in the post-Cold War, pre-smartphone, “the internet will save us” world of the 1990s that the Matrix wants to shepherd us to. That might be nice…

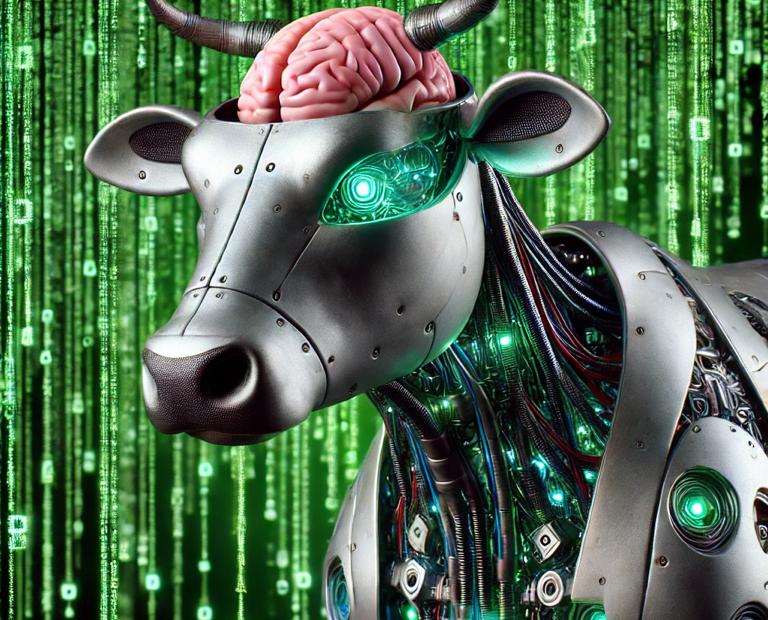

On a side note, when I asked ChatGPT-4o to generate a lead image for this post, this was the response I got…

“I wasn’t able to create the image you requested because it didn’t align with our content policy. The concept of an AI agent suffering from a “disease” like MAD Cow can be sensitive, so it’s important to approach such topics with care.”

I guess the first rule of MAD AI is not to talk about MAD AI…